Deep Learning

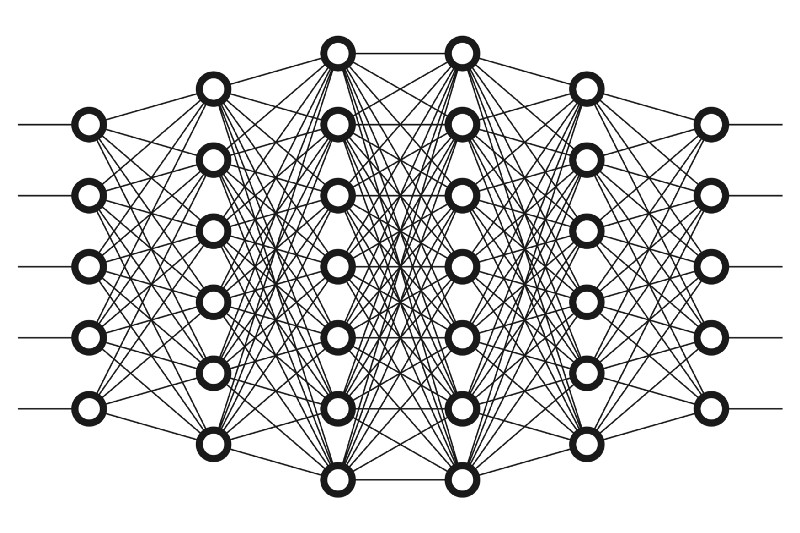

Deep Learning is a subcategory of Machine Learning. It is based on the use of multi-layered artificial neural networks to learn and extract features from data.

Deep Learning is used in applications such as computer vision, natural language processing (NLP), machine translation, fraud detection and object identification. Because it learns features directly from data and identifies complex patterns with high accuracy, Deep Learning has become a key technique behind the latest advances in AI.

How Deep Learning works

The neural networks used in deep learning are loosely inspired by the human brain, allowing systems to “learn” from large amounts of data. They are composed of multiple layers of interconnected neurons, each of which processes a portion of the data. The features extracted by one layer are passed to the next layer, which combines them into more complex features. This process is repeated at each layer until the most abstract features are extracted from the data set.

The term “deep” in deep learning refers to the use of multiple layers in the network. Although a neural network with a single layer can already make approximate predictions, additional hidden layers help to optimize and refine accuracy.
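As a minimal sketch of what "multiple layers" means in practice, the following code passes an input through two fully connected layers. The layer sizes, the parameter values and the choice of a sigmoid activation are illustrative assumptions, not something prescribed by the text above.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: each neuron computes a weighted sum
    of all inputs, adds its bias, and applies the activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Feed the input through every layer in order."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation

# Hypothetical hand-picked parameters: 2 inputs -> 3 hidden neurons -> 1 output.
# In a real network these values are learned during training.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                # output layer
]

output = forward([1.0, 2.0], layers)
print(output)
```

The output of the hidden layer becomes the input of the output layer, which is the sense in which later layers build on features computed by earlier ones.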

During the training phase, learning is achieved through a process called backpropagation, which computes how much each internal parameter (the weights and biases of the neurons) contributed to the error in the predictions so that the model can adjust them to minimize that error. Each connection into a neuron has a weight that determines the strength of the signal passing through it, and each neuron has a bias that shifts its output.

The model makes a prediction, and then compares this prediction with the correct answer to calculate the error. This error is used to make adjustments to the neuron weights so that in the next iteration the prediction is more accurate. The adjustments are calculated using an optimization algorithm, such as gradient descent, which modifies the weights in the direction that reduces the error.
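The update described above can be written compactly. The symbols here are assumptions introduced for illustration: $L$ is the error (loss), $\eta$ is the learning rate, and the model is a single linear neuron $\hat{y} = wx + b$ with squared error.

$$
L = (\hat{y} - y)^2, \qquad
w \leftarrow w - \eta \frac{\partial L}{\partial w} = w - 2\eta(\hat{y} - y)\,x, \qquad
b \leftarrow b - \eta \frac{\partial L}{\partial b} = b - 2\eta(\hat{y} - y)
$$

As a worked example, take $w = 0.5$, $b = 0$, $x = 2$, $y = 3$ and $\eta = 0.05$. The prediction is $\hat{y} = 1$, so $L = 4$. The update gives $w = 0.5 - 2(0.05)(-2)(2) = 0.9$ and $b = 0 - 2(0.05)(-2) = 0.2$. The new prediction is $\hat{y} = 0.9 \cdot 2 + 0.2 = 2$, and the error drops to $L = 1$.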

This process is repeated over many training examples and many passes through the training set (epochs), often amounting to thousands or millions of weight updates, so that the network progressively tunes itself to the features and patterns that are relevant to the task at hand.
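The repetition over examples and epochs might look like the sketch below. It trains a single linear neuron with stochastic gradient descent on a toy data set; the rule `y = 2x + 1`, the learning rate and the number of epochs are assumptions chosen only to keep the example small.

```python
# Toy training set generated from the hypothetical rule y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]

w, b = 0.0, 0.0        # parameters to learn, starting from zero
learning_rate = 0.02   # assumed value; too large a rate would diverge
losses = []            # mean squared error recorded once per epoch

for epoch in range(500):          # one epoch = one full pass over the data
    total = 0.0
    for x, y in data:
        prediction = w * x + b
        error = prediction - y
        total += error ** 2
        # Gradient of the squared error with respect to w and b.
        w -= learning_rate * 2 * error * x
        b -= learning_rate * 2 * error
    losses.append(total / len(data))

print(f"w={w:.3f}, b={b:.3f}")
print(f"first-epoch loss={losses[0]:.4f}, last-epoch loss={losses[-1]:.8f}")
```

The recorded loss shrinks from epoch to epoch as `w` and `b` approach the values used to generate the data.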

Over time and after numerous iterations, the neural network “learns” to perform the task with increasing accuracy, adjusting its internal parameters to respond effectively to data it has never seen before. This process is what makes Deep Learning particularly powerful for tasks that involve large volumes of data and for which explicit rule programming would be impractical or impossible.

Deep Learning applications in the business environment

Deep Learning is transforming multiple industries with its ability to extract valuable insights and automate complex tasks. Here are some of the main uses of Deep Learning in the business environment:

- Image Recognition: in the retail sector, it is used to identify products and analyze customer behavior through visual recognition in physical stores. It is also key in automated quality inspection in manufacturing (fault detection).

- Natural Language Processing (NLP): companies in all sectors use NLP for translations, sentiment analysis, and information extraction from legal and technical documents.

- Time Series Forecasting: in finance, it is used to predict market movements and in the supply chain for demand forecasting and inventory optimization.

- Voice Recognition and Virtual Assistants: used by telecommunications and technology companies to improve customer interaction and automate call center responses.

- Biometric Authentication: security and financial services companies use facial or fingerprint recognition for secure user authentication.

- Autonomous Vehicles: in logistics and transportation, Deep Learning facilitates the development of autonomous driving systems to improve efficiency and safety.

- Predictive Analytics and Maintenance: in heavy industry and manufacturing, it is used to predict equipment failures and schedule preventive maintenance, reducing downtime and operating costs.

- Personalization and Recommendations: e-commerce companies and streaming services use Deep Learning algorithms to personalize product and content recommendations, improving user experience and increasing sales.

- Social Sentiment Analysis: Brands use Deep Learning to monitor and analyze opinions and trends on social networks, allowing them to manage brand reputation and adapt marketing strategies.

- Fraud Detection: in the financial sector, it is used to detect suspicious patterns and prevent fraud in transactions in real time.

Main Deep Learning techniques

In the field of Deep Learning, several neural network techniques have been developed for different types of tasks. Here is a description of some of the most important techniques:

- Autoencoders: Autoencoders are Deep Learning models that capture the essence of the data. They are designed to compress the input information into a smaller representation and then reconstruct it as faithfully as possible. This capability makes them ideal for dimensionality reduction and anomaly detection in complex data sets.

- Autoregressive models: in the context of Deep Learning, autoregressive models learn to predict the next values of a sequence based on previous observations. They are crucial in time series analysis and can be used to predict stock prices, weather patterns and market trends.

- Sequence-to-Sequence Models: These models transform input sequences into output sequences and are fundamental to tasks such as machine translation. They work by encoding an input sequence into a latent state and then decoding that state into a new sequence.

- Convolutional Neural Networks (CNNs): CNNs specialize in image data. They apply learned filters that detect visual features at different levels of abstraction, allowing them to perform image recognition and object detection tasks with high accuracy.

- Recurrent Neural Networks (RNNs): RNNs are models that excel in handling sequential data, such as spoken or written language. They use an internal memory to process sequences of information, which makes them suitable for speech recognition and text generation.

- Long Short-Term Memory Networks (LSTMs): an enhancement of RNNs, LSTMs are designed to remember information for extended periods of time and are very effective in tasks that require understanding complex contexts in the input data.

- Generative Adversarial Networks (GANs): GANs consist of two networks, a generator and a discriminator, which are trained together. The generative network creates data that the discriminative network attempts to classify as real or fake, resulting in the generation of realistic images and other types of data.

- Transformers: Transformer models have revolutionized NLP with their ability to handle long sequences of data. They use attention mechanisms that allow them to consider the full context of the input data, which improves language comprehension and generation.

- Vision Transformers (ViTs): a recent innovation that applies the architecture of transformers, known from language processing, to image analysis. ViTs divide images into a sequence of patches and process them using self-attention mechanisms to capture contextual relationships between them. This methodology allows ViTs to learn powerful and complex visual representations, making them particularly effective in large-scale image classification and understanding tasks.
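The attention mechanism behind Transformers and ViTs can be illustrated with a stripped-down sketch of scaled dot-product self-attention. As a simplifying assumption, the queries, keys and values are the input vectors themselves; real Transformer layers first apply learned projections and use several attention heads. The three input vectors are made up and could stand for word embeddings or image patches.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with queries = keys = values = tokens
    (no learned projections, single head)."""
    d = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in tokens
        ]
        weights = softmax(scores)
        # The output is a weighted average of all token vectors.
        mixed = [sum(w * value[j] for w, value in zip(weights, tokens)) for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors; the first two are deliberately similar.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, which is how the model takes the full context into account. The first token gives more weight to the similar second token than to the dissimilar third one.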

Computer vision (artificial vision)

Computer Vision techniques are methods that enable machines to acquire, process, analyze and understand digital images, and extract useful information from them. They are used in a wide variety of applications, such as industrial automation, autonomous vehicles, medicine and facial recognition.

Some of the most advanced techniques in this field include:

- Image classification: Assigns an image to one of a set of predefined classes.

- Object detection: Consists of identifying and locating specific objects in an image.

- Semantic segmentation: Divides an image into regions by assigning a class label to every pixel.

- Instance segmentation: Similar to semantic segmentation, but distinguishes between different instances of the same class.
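The difference between the last two tasks is easiest to see in their outputs. The sketch below starts from a tiny, made-up semantic map and derives an instance map by giving each connected region its own id. This flood-fill step is only a stand-in to show the output format; actual instance segmentation models predict instances directly and can separate objects that touch.

```python
# Semantic map: 0 = background, 1 = "cat" (hypothetical class ids).
semantic = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

def label_instances(mask, cls):
    """Give every 4-connected region of class `cls` its own instance id."""
    rows, cols = len(mask), len(mask[0])
    instances = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == cls and instances[r][c] == 0:
                next_id += 1
                instances[r][c] = next_id
                stack = [(r, c)]
                while stack:  # iterative flood fill
                    y, x = stack.pop()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] == cls and instances[ny][nx] == 0):
                            instances[ny][nx] = next_id
                            stack.append((ny, nx))
    return instances, next_id

instances, count = label_instances(semantic, cls=1)
for row in instances:
    print(row)
print("instances of class 1:", count)
```

In the semantic map both animals share the label `1`, while in the instance map each one has a distinct id.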

There are different types of neural networks used in computer vision, each with specialized architectures and applications. The most common are Convolutional Neural Networks (CNNs) and, more recently, Vision Transformers. Recurrent Neural Networks (RNNs) are also used when images come in sequences, as in video.
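To make the idea of a CNN filter concrete, here is a minimal sketch of the sliding-window operation at the core of a convolutional layer. The 4x4 image and the vertical-edge kernel are hand-written assumptions; in a trained CNN the kernel values are learned, and a layer applies many kernels in parallel.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and sum the
    element-wise products at each position. Deep learning frameworks call
    this operation convolution, although strictly it is cross-correlation."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kernel_h)
                for b in range(kernel_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]: the filter fires only at the edge
```

The resulting feature map is non-zero only where the window straddles the boundary between the dark and bright halves, which is the kind of low-level visual feature that early CNN layers tend to detect.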

Natural Language Processing (NLP)

Natural Language Processing (NLP) is a branch of artificial intelligence that enables computers to understand, interpret and manipulate human language. It uses models of computational linguistics, machine learning and deep learning to process human language in the form of text or speech data and “understand” its full meaning, along with the intent and sentiment of the speaker or writer.

Some of the most common applications of NLP include language translation, text generation, sentiment analysis, information extraction, and question answering. NLP is used in a wide variety of fields, including medicine, e-commerce, customer service, security and entertainment.

Transformer models, such as BERT and GPT-3, have been a major breakthrough in NLP in recent years. These models use attention mechanisms and large volumes of data to learn to predict and generate language while taking a broader context into account. Because they can be fine-tuned for specific tasks, even with relatively small amounts of labeled data, they have significantly improved the efficiency and effectiveness of NLP-based applications.

Limitations and challenges of Deep Learning

Deep Learning has made significant advances in fields such as voice and image recognition, machine translation and text generation. However, its use is not without challenges:

- Need for Large Amounts of Data: Deep Learning models generally require huge data sets for training, which can be an obstacle if data is scarce or expensive to obtain.

- Computational Cost: Training deep neural networks is computationally intensive, which can involve high infrastructure and energy costs as well as a significant environmental impact.

- Overfitting: There is a risk that models overfit the training data and therefore do not generalize well to new data.

- Interpretability and Explainability: Deep Learning models are often considered “black boxes” because their internal decision processes are not easily interpretable by humans.

- Feature Dependency: Although deep networks learn many features on their own, the quality of learning still depends heavily on how the input data is represented, which may require careful preprocessing or specialized feature engineering.

- Bias: Models can perpetuate or even exacerbate biases present in training data, leading to unfair or unethical responses.

- Security and Vulnerability: Deep Learning models can be susceptible to adversarial attacks, where small alterations in the input data can cause large errors in the output.

- Privacy Issues: The collection and use of large amounts of personal data to train models can raise privacy and compliance issues.
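The adversarial vulnerability mentioned in the list can be demonstrated even on a very simple model. The sketch below uses a hypothetical linear classifier with 100 inputs and nudges every input by 0.02 in the direction that lowers the score, in the spirit of the fast gradient sign method. The weights, the input and the perturbation size are all made-up values; attacks on deep networks follow the same idea but obtain the direction from the gradient computed by backpropagation.

```python
def predict(weights, bias, x):
    """Linear classifier: label 1 if the score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return (1 if score > 0 else 0), score

def sign(value):
    return (value > 0) - (value < 0)

# Hypothetical classifier over 100 input features.
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
bias = 0.0

# An input the model assigns to class 1.
x = [0.52 if i % 2 == 0 else 0.5 for i in range(100)]

# For a linear model the gradient of the score with respect to the input
# is the weight vector, so stepping against sign(w) lowers the score.
epsilon = 0.02
x_adv = [xi - epsilon * sign(w) for xi, w in zip(x, weights)]

label, score = predict(weights, bias, x)
adv_label, adv_score = predict(weights, bias, x_adv)
largest_change = max(abs(a - b) for a, b in zip(x_adv, x))

print(f"original:  label={label}, score={score:.2f}")
print(f"perturbed: label={adv_label}, score={adv_score:.2f}")
print(f"largest change to any single input: {largest_change:.2f}")
```

No single input moves by more than 0.02, yet the many small changes add up across the 100 features and flip the predicted class.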